Presentation of the researchers involved and their roles in the ARS project.

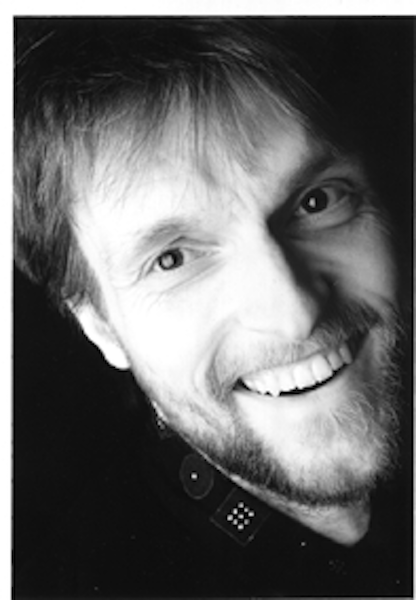

Axel Roebel

Analysis/Synthesis Team (STMS Lab UMR 9912 — IRCAM/CNRS/Sorbonne University)

Axel Roebel is research director at IRCAM and head of the Sound Analysis-Synthesis team.

His research focuses on signal processing algorithms for speech, music, and sound, notably for artistic applications. He is one of the main authors of SuperVP, an enhanced phase vocoder for music and speech processing that has been integrated into numerous professional audio tools. He has worked on parametric representations of speech and singing signals, for example the signal processing backend PaN in the singing voice synthesizer ISiS (ANR project ChaNTeR). More recently, he has become interested in building signal processing algorithms using deep learning.

Axel Roebel is the coordinator of the ARS project and will, in particular, supervise the research on singing voice analysis and transformation using deep-learning-based algorithms.

Céline Chabot-Canet

Passages XX-XXI (EA 4160) / Université Lyon 2

Céline Chabot-Canet is a lecturer in musicology at the University of Lyon 2 (Passages XX-XXI). Her research focuses on popular and contemporary music, computer music, and the analysis of vocal interpretation in recorded music. She is the author of the PhD thesis Interpretation, phrasing and vocal rhetoric in French chanson since 1950: expliciter l’indicible de la voix (2013), in which she develops a method for analyzing vocal interpretation based on transdisciplinarity and the association between human sciences and spectral analysis. She is the author of multiple articles on popular music, in particular on voice, and of the book Léo Ferré: une voix et un phrasé emblématiques (IASPM Prize 2008). She works in collaboration with the Analysis-Synthesis team at IRCAM on the description and modeling of interpretative styles in singing: the ANR ChaNTeR project (Digital singing with real-time control, 2013-2017) and the ANR ARS project (Analysis and tRansformation of Singing Style, 2020-2024).

Gaël Martinet

Flux Software Engineering

Gaël Martinet is the founder, CEO, and head of software engineering at Flux Software Engineering.

Since the 2000s, and with the founding of the company in 2006, Gaël has developed unique, cutting-edge software for audio analysis, dynamics processing, and spectral balance.

He has collaborated with research teams at IRCAM for many years, and this collaboration has led to the release of a complete range of innovative products: «IRCAM Tools», and especially FLUX::’s flagship, «SPAT REVOLUTION», for an «Immersive Audio Revolution». Through this collaboration, FLUX:: became the first brand to establish a partnership with the research institute. Currently, Gaël focuses on developing intuitive and technically innovative audio software tools, and on improving FLUX::’s existing product range.

Christophe d’Alessandro

Lutheries-Acoustique-Musique team at Institut Jean Le Rond d’Alembert (UMR CNRS 7190) – Sorbonne Université

Christophe d’Alessandro is a researcher and musician, director of research at the CNRS, head of the Lutheries-Acoustique-Musique team at Institut Jean Le Rond d’Alembert (UMR CNRS 7190), and titular organist of the church of Sainte-Élisabeth in Paris. Trained in pure mathematics and music, he completed his PhD in computer science at Sorbonne Université and joined the CNRS in 1989. He has published over 250 articles in journals, conference proceedings, and book chapters, has supervised 25 doctorates, and has been invited as a visiting researcher to Canada, India, Japan, and Argentina. His research interests include speech and voice sciences, computer music, music acoustics, and organology. Trained in harpsichord, organ, and composition, he was appointed organist of Sainte-Élisabeth in 1987 and has recorded for disc, radio, and television programs. His approach to musical research combines improvisation, composition, and the creation of instruments, particularly in the fields of organ, live electronics, and performative voice synthesis.

Within the ARS project, Christophe d’Alessandro works on the gestural control of voice synthesis and digital audio effects for performance and research on vocal style.

Frederik Bous

Analysis/Synthesis Team (STMS Lab UMR 9912 — IRCAM/CNRS/Sorbonne University)

Frederik Bous is a PhD student at Sorbonne University and a laureate of EDITE’s PhD scholarship, working at IRCAM in the Sound Analysis-Synthesis team.

In his PhD, on the topic of parametric speech synthesis with deep neural networks, he investigates meaningful parametric representations of speech that allow transformation and synthesis of the human voice. The PhD extends the research he carried out for his Master’s thesis, in which he worked on singing synthesis.

Nicolas Obin

Analysis/Synthesis Team (STMS Lab UMR 9912 — IRCAM/CNRS/Sorbonne University)

Nicolas Obin is an associate professor at the Faculty of Sciences of Sorbonne Université and a researcher in the Sound Analysis and Synthesis team. He holds a PhD in computer science on the modeling of speech prosody and speaking style for text-to-speech synthesis (2011), for which he received the best PhD thesis award from La Fondation Des Treilles in 2011.

He conducts research in the fields of audio signal processing, machine learning, and statistical modeling of sound signals, specializing in speech processing. His main area of research is the generative modeling of expressivity in spoken and singing voices, with applications in fields such as speech synthesis, conversational agents, and computational musicology.

He is actively involved in promoting digital science and technology for arts, culture, and heritage. In particular, he has collaborated with renowned artists (Georges Aperghis, Philippe Manoury, Roman Polanski, Philippe Parreno, Eric Rohmer, André Dussollier) and has helped to reconstitute the digital voices of personalities, as in the artificial cloning of André Dussollier’s voice (2011), the short film Marilyn (P. Parreno, 2012), and the documentary Juger Pétain (R. Saada, 2014).

Yann Teytaut

Analysis/Synthesis Team (STMS Lab UMR 9912 — IRCAM/CNRS/Sorbonne University)

Yann Teytaut is a Ph.D. student at IRCAM in the Sound Analysis-Synthesis team.

He was previously a Master’s student at IRCAM in the ATIAM program (Acoustics, Signal processing and Computer science applied to Music), which confirmed his interest in music technology research.

In the ARS project, Yann Teytaut will investigate and develop deep-learning-based algorithms for the analysis and transformation of singing voice style.

Judith Deschamps

Laureate of the Artistic Research Residency Program 2020/21 at IRCAM.

Judith Deschamps is a multidisciplinary artist. Her project, «Quell’usignolo che innamorato»: The Resurgence of an Artificial and Deeply Plural Voice, draws on advances in vocal signal processing and deep learning to recreate a song that the Italian castrato Farinelli would have performed for King Philip V of Spain to alleviate his melancholy.

Conducted in collaboration with the Analysis-Synthesis team, the residency will experiment with the singing voice conversion technologies developed in the ARS project, with the aim of creating a hybrid chant that incorporates the specificities of four real singing voices.

Daniel Hernán Molina-Villota

Lutheries-Acoustique-Musique team at Institut Jean Le Rond d’Alembert (UMR CNRS 7190) – Sorbonne Université

Daniel Hernán Molina Villota is a PhD candidate in the LAM team (Lutheries-Acoustique-Musique) at Institut Jean Le Rond d’Alembert, Sorbonne Université.

He holds a Master’s degree in Mechanics: Acoustics (Université Paris-Saclay and Institut Polytechnique de Paris), a Master’s degree in Computer Music (Université Jean Monnet, member of Université de Lyon), and a Master’s degree in Acoustical Engineering (Universidad de Málaga, Spain).

Within the ARS project, Daniel works on interactive digital audio effects and gestural control for vocal style in real time.

Antoine Petit

Passages XX-XXI (EA 4160) / Université Lyon 2.

Antoine Petit is a Ph.D. candidate at Université Lumière Lyon 2 (Passages XX-XXI). Building on the theoretical foundations laid out in his Master’s thesis, his current research investigates the stylistic and rhetorical features of contemporary anglophone recorded pop songs as heard from a musical standpoint. Combining multiple analytical methodologies within a semiotic framework, his work focuses on the singing voice and attempts to build an exhaustive view of what a song musically is, in order to understand what makes it “it” and what enables it to make sense for us.

Within the ARS project, Antoine Petit will provide precise descriptions of vocal performances in recorded songs, including their multiple relationships with technology.

Caio Lang

Analysis/Synthesis Team (STMS Lab UMR 9912 — IRCAM/CNRS/Sorbonne University)

Caio Lang is an engineering student at ENSTA Paris (France) and Unicamp (Brazil), as well as a musician. He is interested in the interfaces between music and technology, especially regarding the expressive potential of the human voice.

Caio joins the ARS project as a research intern in the Sound Analysis-Synthesis team, assisting in the creation and preprocessing of the project’s singing style database.